Want to know what people think of your sales efforts? Why not put them to the test? Better still, run split tests!

We all know that in sales, results are everything. When you’re writing emails, calls to action or sales email subject lines, the right combination of words determines your results. But how do you know what your audience finds most appealing?

Split tests, or A/B tests, are the solution!

We’ve been in sales for over five years, and we’ve run more A/B tests than we can count. That experience taught us a lot, so we’ve put together this guide to split testing to help you get the results you want and make informed decisions about your next sales action. Done badly, A/B split testing can produce misleading results. So if you work in sales and feel stuck on the A/B testing puzzle, here’s what you’ll find in this article:

Let’s go!

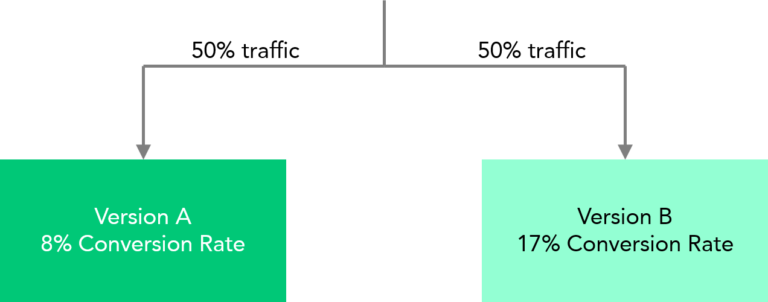

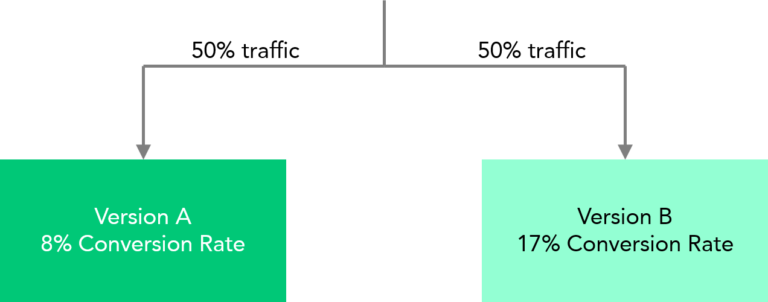

By definition, split testing, commonly known as A/B testing, is a method of simultaneously sending different versions of a piece of content to different segments of the same audience, with the aim of identifying the version that most effectively elicits the desired action.

This approach is crucial for tasks such as writing sales copy, sending invitations to connect on LinkedIn, testing follow-up strategies, and more, as it helps make data-driven decisions while saving money, time and potential leads.

In sales, split testing compares two versions of a sales pitch to determine which delivers better results, so you can increase conversions and sales. When it comes to prospecting, you can test your approach across every communication channel, and even test individual elements to find the most effective approach to lead generation and prospecting.

One of the most appealing aspects of split testing is its simplicity. It’s easy and straightforward, and if done correctly, an A/B test can significantly improve your sales efforts. Using the right tool also saves time by partially automating the process, but we’ll come back to that later in this blog.

Almost anything can be A/B tested. For example, in LeadIn, our LinkedIn automation tool and cold email software, you can test up to five variations of:

You can also test smaller elements within these messages, such as subject lines, message body, signature, links or calls to action.

Note: from experience, we advise against testing everything at once. Why? Because you won’t be able to tell what influenced the results. Test one element at a time, and keep refining and retesting the options that have proven successful.

By carrying out A/B tests on different elements of a campaign or website, salespeople can see what works best for converting prospects into customers. By testing several approaches and choosing the most effective, you can achieve higher conversion rates and a better lead generation strategy.

Why guess when you can know? Split testing gives you hard data to base your decisions on, because numbers don’t lie.

When you use split-test data to better understand your target audience, you can give your potential customers what they want, strengthening relationships and making their experience more user-friendly and enjoyable. Ultimately, A/B testing helps build customer loyalty, strengthen your brand’s reputation and attract more customers.

In sales, optimization is paramount, and split testing is a very cost-effective way to gather more useful data about your sales or marketing approach. The calculation is simple: you can use A/B testing to determine whether or not the changes are right for you, before making larger investments. In the long term, split testing saves a great deal of time and money.

Now that you know why you should do split testing, here’s a list of things you can test. 👇

In sales, there are many ways to optimize performance and increase conversions. Here is the complete list:

Split testing sounds good now, doesn’t it? Want to use it right away? Here’s how to do it, step by step 👇

Conversion goals are the most important metric to define when planning a split test. They should let you determine whether the variant (option B) performs better than the original. Typical goals are link clicks, call bookings or product purchases.

Many prospecting tools provide an overview of the areas you can and should optimize. This helps you collect data more quickly and spot areas for improvement.

By examining your metrics, you can easily find the parts of your sales process that should be A/B tested. For example, a poor open rate often points to a poorly written subject line, and it usually drags your response rate down with it. To start, choose a metric, identify what might be causing it to underperform and test that.
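To make “choose an indicator” concrete, here’s a minimal Python sketch that turns raw funnel counts into step-by-step conversion rates and flags the lowest one as a candidate for your next test. The step names and counts are hypothetical, and comparing rates across different steps is only a crude heuristic: in practice you’d also compare each rate to your own historical benchmarks.

```python
def funnel_rates(steps):
    """Return the step-to-step conversion rate for an ordered list of (name, count) pairs."""
    rates = {}
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        # Guard against division by zero when an earlier step has no volume.
        rates[f"{prev_name}->{name}"] = count / prev_count if prev_count else 0.0
    return rates


def weakest_step(steps):
    """Name of the transition with the lowest conversion rate: a candidate for your next A/B test."""
    rates = funnel_rates(steps)
    return min(rates, key=rates.get)


# Hypothetical funnel counts for one campaign (illustrative only, not real data).
campaign = [("sent", 500), ("opened", 100), ("replied", 30), ("booked", 12)]
print(funnel_rates(campaign))
print(weakest_step(campaign))  # sent->opened, so the subject line is the first suspect
```

Here the open step converts at 20%, the lowest of the three, which matches the article’s advice to start by testing the subject line.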

With split testing, you use specialized software to create a modified version of whichever element you want to test. This could be a different version of your LinkedIn connection request message, a different email subject line or something else entirely.

Once you’ve created one or more variants, split your audience sample in two (or more, if there are more variants) and decide who will see variant A and who will see variant B (or C, and so on). Some tools, like LeadIn, usually do this for you, randomly and evenly, to ensure a fair comparison.
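If you want to see what “randomly and evenly” means in practice, here’s a minimal Python sketch: shuffle the leads to remove ordering bias, then deal them round-robin so group sizes differ by at most one. The function name and lead IDs are hypothetical, and this is not LeadIn’s actual implementation.

```python
import random


def split_leads(leads, n_variants=2, seed=None):
    """Randomly assign leads to n_variants groups whose sizes differ by at most one.

    Shuffling first removes any ordering bias (e.g. leads sorted by company size);
    dealing round-robin afterwards keeps the groups balanced.
    """
    if n_variants < 1:
        raise ValueError("n_variants must be at least 1")
    rng = random.Random(seed)  # seed only for reproducible examples
    shuffled = list(leads)
    rng.shuffle(shuffled)
    groups = [[] for _ in range(n_variants)]
    for i, lead in enumerate(shuffled):
        groups[i % n_variants].append(lead)
    return groups


leads = [f"lead_{i}" for i in range(10)]  # hypothetical lead IDs
variant_a, variant_b, variant_c = split_leads(leads, n_variants=3, seed=42)
print(len(variant_a), len(variant_b), len(variant_c))  # 4 3 3
```

Shuffling before dealing matters: if you simply cut a sorted list in half, one variant could end up with all the large companies or all the recent sign-ups, and the test would measure that difference instead of your message.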

Once you’ve launched the split test, all you have to do is track and measure the results of each version you’ve created. You can, of course, use tools to track conversion rates and overall engagement indicators, for further study and optimization.

The final step in split testing is to analyze the results of your experiment and decide which version to adopt. Just make sure the gap between variants is large enough, and based on enough prospects, that it isn’t down to chance.
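Before declaring a winner, it’s worth checking that the gap between variants isn’t just noise. One common way to do that is a two-proportion z-test; the Python sketch below is a minimal version using only the standard library. The reply counts are hypothetical, the 5% threshold is a convention rather than a rule, and your prospecting tool’s reports may use a different method (or none).

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test comparing the conversion rates of variants A and B.

    Returns (rate_a, rate_b, z, p_value). Uses the pooled standard error and the
    normal approximation, which is reasonable when each variant has at least a
    handful of conversions and non-conversions.
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # both variants at 0% or both at 100%: no detectable difference
        return rate_a, rate_b, 0.0, 1.0
    z = (rate_b - rate_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return rate_a, rate_b, z, p_value


# Hypothetical results: 20 replies from 200 prospects (A) vs 36 from 200 (B).
rate_a, rate_b, z, p = two_proportion_z_test(20, 200, 36, 200)
print(f"A={rate_a:.0%} B={rate_b:.0%} z={z:.2f} p={p:.3f}")
if p < 0.05:
    print("B's lead is unlikely to be chance at the usual 5% threshold.")
else:
    print("Not enough evidence yet: keep the test running.")
```

With the same 10% vs 15% style gap but only 20 prospects per variant, the p-value would be far above 0.05, which is exactly why small samples produce the misleading results this guide warns about.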

Salespeople use A/B testing in prospecting to find out whether their efforts resonate with prospects. You can use these tests across your sales process, including sales emails and subject lines, product presentations and demos, follow-up strategies and customer segmentation. Let’s take a look at some of these in detail. 👇

These days, prospecting is mainly digital. If we take email as a starting point for split testing, we can test several elements of a cold email. For example, if your open rate is too low, use split testing to try out different variants of your subject line. If too few prospects click on your links, consider testing the CTA that leads to the link, or the body of the email, and so on.

To summarize, in email prospecting, you can test 👇

In recent years, sales prospecting has gone digital, and what better place to find potential prospects than LinkedIn – a professional social media platform with nearly a billion users and 63 million decision-makers? If you narrow your audience down to a smaller sample, you can use split testing to determine which social selling techniques work best for your LinkedIn prospecting efforts.

With the help of an automation tool, you can make the A/B testing process much easier. What’s more, if the tool has excellent statistics tracking, your analysis and final decision will be clear. And now, here are all the things you can split-test when it comes to LinkedIn. 👇

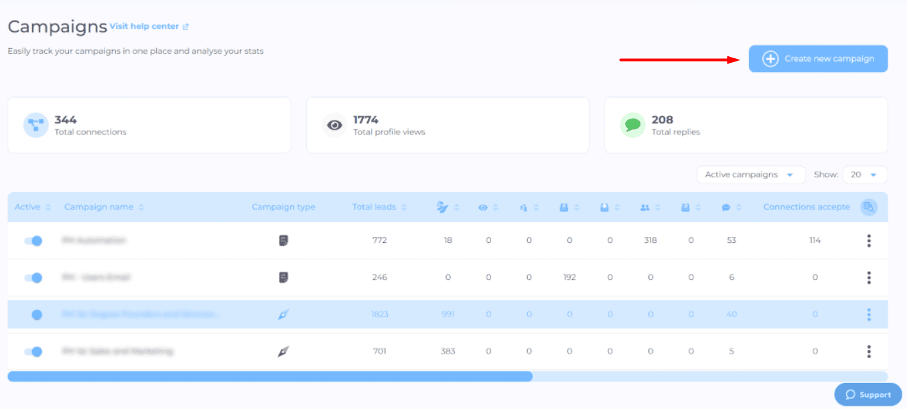

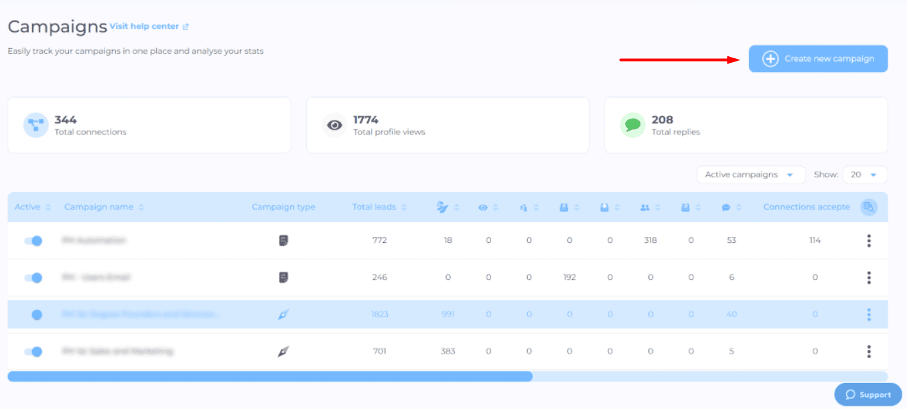

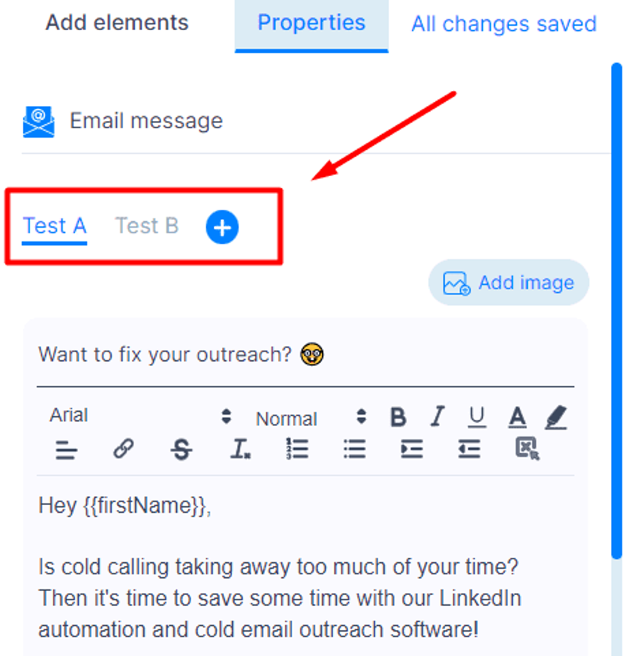

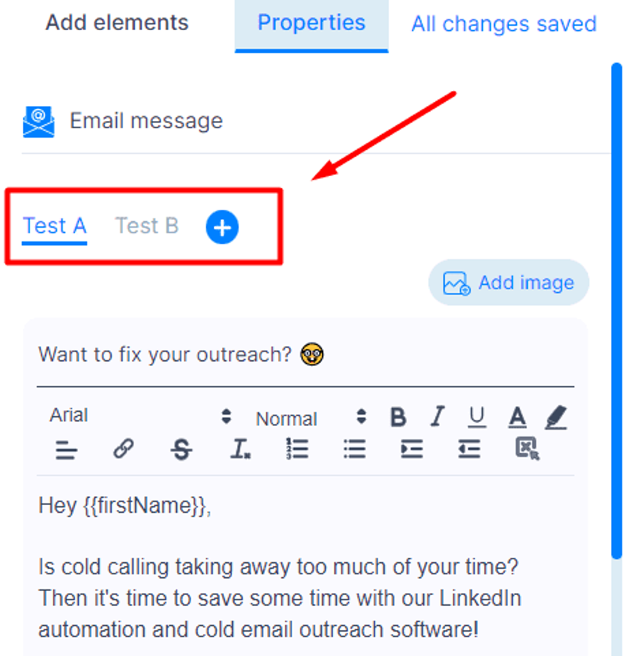

Now that you know where and what to test, the question is which tool to use. We’ve been hinting at it all along: you can use LeadIn, a LinkedIn automation and cold email software with built-in A/B testing. It lets you test different elements of your email and LinkedIn prospecting campaigns.

1. Give your campaign a name.

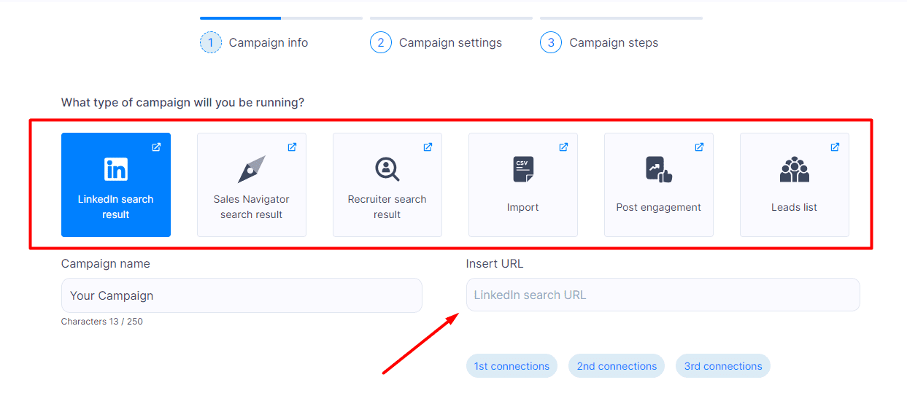

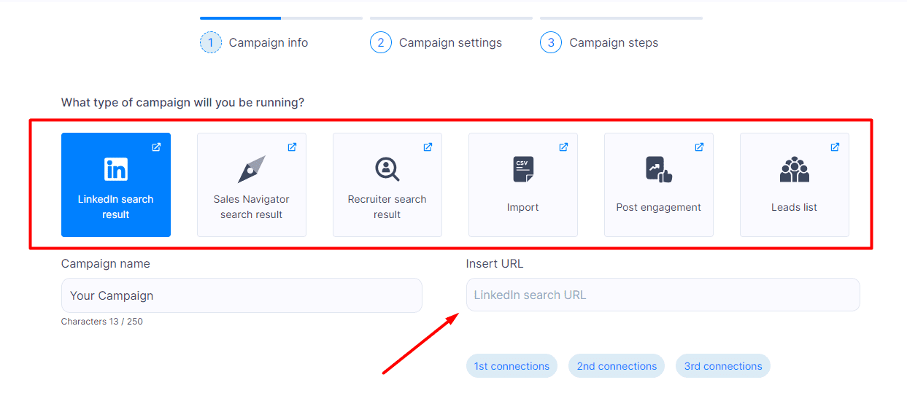

2. Then select your lead source, or the place from which LeadIn will draw the leads.

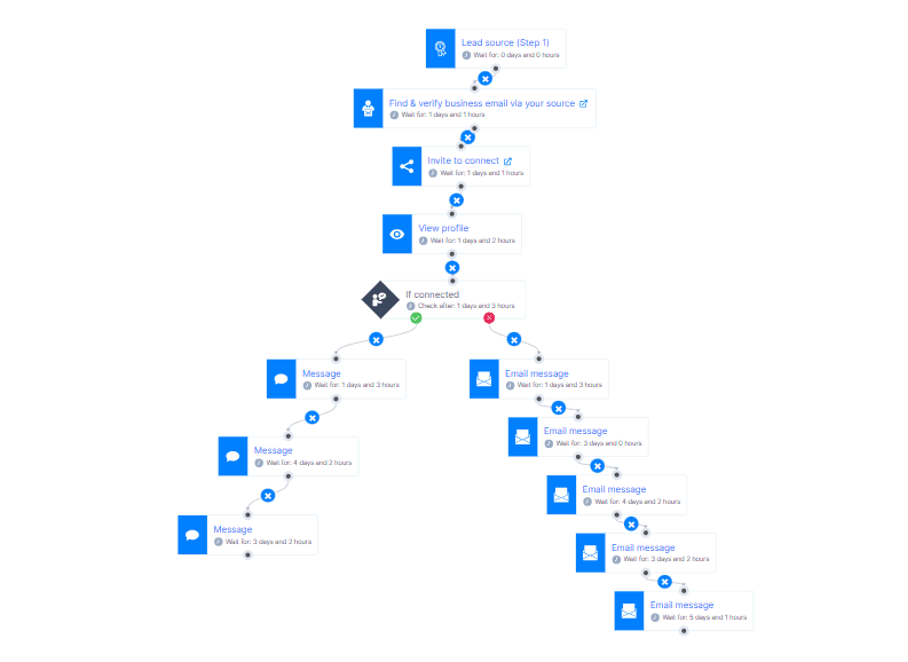

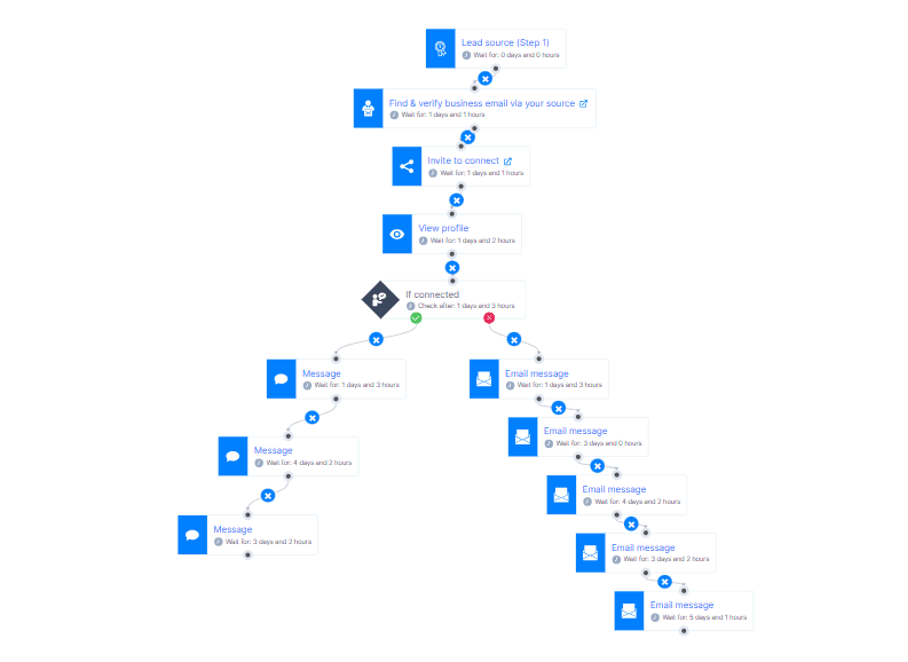

3. Adjust your campaign parameters and click on the “Create sequence” button.

This is how you create your smart sequence – an algorithm that lets you combine “if” conditions with different actions. This way, LeadIn will follow the prospecting flow you create and find the fastest way to your prospect.

Finally, once your campaign is complete, go to the reports page, scroll down to the steps in your sequence and check the results. And there you go!

Here’s your winning subject line:

Here’s an overview of everything you can test with LeadIn. ⬇️

| A/B test in LeadIn | What to test |
|---|---|
| Subject line (emails and InMails) | Play with subject line text, length, emoji, capitalization and more. See which subject line drives the highest open rate. |
| Images and GIFs (emails, InMails, LinkedIn) | Edit images and GIFs, play with personalization, add or modify a single custom element, and more. Check which images and GIFs influence response/conversion rates. |
| Writing style (all formats) | Test different writing styles and tones of voice for your target audience to increase response rates. |
| Formatting (all formats) | Check whether splitting the message body into several parts, with or without headings, produces the desired results. |
| Call to action, or CTA (all formats) | Check which call to action generates the most conversions. |
| Content depth (all formats) | Find out whether your audience prefers long-form content that covers every detail, or shorter content. |
| Invitations to connect | Send blank connection requests, or generic or personalized messages, to see what people respond to most. |
| Paragraphs (all formats) | Modify the key paragraph in the body of your message to see how prospects react and whether you’re getting the results you want. |
| Message body (connection request, LinkedIn message, InMail, email) | Send a completely different message body to see its impact on response/conversion rates. |
| Delay between messages | Check whether the delay between messages affects response/conversion rates. |
| Links | Insert different links and see which ones interest your prospects. |
| Signature | Include links or other text in your signature that are likely to increase requests for calls and demos. |

Note: with LeadIn, you can combine LinkedIn and email for personalized multi-channel contact.

You can use A/B testing to determine the pricing model that best suits your target audience. You can even go a step further and experiment with discount offers to see what generates the most sales and revenue.

In sales, it pays to keep varying the style and content of your product demonstrations. Split testing helps you find the most compelling way to present your product’s value to prospects, leading to more conversions and better overall results.

You can easily see how your target audience reacts to different product descriptions and presentations, or service value propositions. Simply divide them into two groups and start A/B testing!

Note: LeadIn automatically distributes your leads across the variants you’ve created. For example, if you create 3 variants, one third of your leads automatically goes to each. This way you don’t have to split your prospects yourself and assign them to each variant manually.

Sometimes, offering the right combination of products or services is what turns a prospect into a satisfied customer. The right bundle can significantly boost sales.

You can use A/B tests and their magic to see how changing the presentation of customer testimonials influences your prospects’ trust and buying decisions.

You can use A/B testing to experiment with different upselling or cross-selling methods. There may be a better offer that makes your potential customers more receptive to your sales pitch.

Before we let you test your sales efforts, here are a few best practices we’ve learned first-hand from our own A/B tests and sales campaigns. ⏬

We’ve said it before and we’ll say it again. Testing too many elements at once will produce misleading results. If, for example, you change the CTA and the email body at the same time, you won’t get clear results on the change your audience has reacted to. We know there’s a lot to test, but your priority should be to identify the weakest part of your approach and start there.

By testing one element at a time, you’ll obtain reliable results. And there’s no rush: once you start optimizing your approach, you’ll make significant improvements one A/B test at a time, and the results will follow.

Note: based on our experience with A/B testing, we recommend testing both (or more) variants simultaneously.

Many salespeople make the mistake of not giving the split test enough time to produce valid results. If you give up too soon, the results you get will be inconsistent and likely to be misleading.

So give your A/B test enough time to produce useful data and valid results. You can then make data-driven decisions and be sure that the changes you’ve made are worthwhile. Depending on your sales cycle and industry, allow 2 to 6 weeks.
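To sanity-check that 2 to 6 week window against your own volume, you can estimate how many prospects each variant needs before a given lift becomes detectable, then divide by how many you contact per week. The Python sketch below uses the standard normal-approximation sample size formula for comparing two proportions. The function names, rates and weekly volume are hypothetical, and the 5% significance level and 80% power are common conventions, not requirements.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_baseline, p_target, alpha=0.05, power=0.8):
    """Approximate prospects needed in EACH variant to detect a move from
    p_baseline to p_target with a two-sided test (normal approximation)."""
    if not (0 < p_baseline < 1 and 0 < p_target < 1) or p_baseline == p_target:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p_baseline * (1 - p_baseline) + p_target * (1 - p_target)
    n = (z_alpha + z_power) ** 2 * variance / (p_baseline - p_target) ** 2
    return math.ceil(n)


def weeks_needed(n_per_variant, n_variants, prospects_per_week):
    """Weeks of outreach required to reach n_per_variant in every variant."""
    return math.ceil(n_per_variant * n_variants / prospects_per_week)


# Hypothetical: 10% reply rate today, hoping to detect a lift to 15%,
# with 300 new prospects contacted per week across 2 variants.
n = sample_size_per_variant(0.10, 0.15)
print(n, "prospects per variant")
print(weeks_needed(n, n_variants=2, prospects_per_week=300), "weeks")
```

The smaller the lift you want to detect, the faster the required sample grows (it scales with one over the squared difference), so low-volume campaigns or subtle changes will naturally land at the longer end of the range.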

Not testing different delays between messages is another common mistake. Create different scenarios and timeframes, as the delay can have a considerable impact on response and conversion rates.

Split testing yields a lot of useful data, because numbers don’t lie. But those figures won’t tell you why prospects acted the way they did. That’s why you also need to talk to your prospects and get real feedback through a survey or poll. This information will help you optimize your approach in the future and better understand your audience.

A/B testing can and should be carried out using automation tools. Why do something manually in this day and age if it’s not necessary? Tools like LeadIn can help you run A/B tests and provide accurate analyses that you can easily interpret, so you can make decisions based on real-time data.

You need to consider split testing as a continuous process. You have to do it often and well. In sales, it’s important to constantly improve. Frequent optimization is essential to achieve consistently better results.

You can test almost every element of your sales approach, but if you don’t focus on the right place, you’ll waste time and resources for nothing. Look at things from your prospect’s point of view and work out what might be causing the problem. Certain metrics can help you pinpoint where it lies (open rate, response rate, bounce rate, etc.).

Testing random elements will only waste your time. Make sure each test has a clear purpose and objective. A well-defined hypothesis gives your split test structure and makes the results much easier to interpret. Here’s how to establish a good A/B test hypothesis:

If you get impatient and stop the split test too soon, your results may be distorted by temporary fluctuations. Reliable patterns in real prospect behavior only emerge over time.

However, running a test for too long can also skew the results. If external factors change, such as seasons or market trends and conditions, the results may become useless. Run split tests for an appropriate length of time, neither too short nor too long. Give your test group enough time for patterns to emerge, then make the changes your results support.

By “complex”, we mean tests with no clear intention that go off in all directions. For example, if your email has a high open rate but gets too few responses, you can infer that the subject line is enticing prospects to open it, and that the lack of responses is more likely due to the CTA or the body of the email.

So don’t modify the body of the email and the CTA at the same time. Choose one of the two elements and test different variants for long enough. Only when the results show that the body of the email isn’t the problem, for example, should you move on to split testing the call to action. Complex A/B tests make it hard to obtain clear results, so keep things simple.

It would be a big mistake to ignore external factors when examining a split test. Many things can influence your prospects: vacations, current market conditions, trends or even changes to competitors’ products or services. Take all these factors into account before interpreting the results, so you don’t draw the wrong conclusions.

In some cases, split test results can be hard to believe. Why? Sometimes the results of A/B tests don’t match salespeople’s expectations. This is where you need to put your ego and beliefs aside and trust the data. If your split test was performed correctly, there’s no reason to ignore the results; doing so would only harm you and your business.

As we’ve seen, A/B testing is a powerful tool that, if used properly, can dramatically improve your prospecting results. You can easily find the weakest link in your prospecting through analysis, and use split testing to determine what works best so you can continually optimize your approach for solid results every time. 🔎

Let’s summarize the advantages of A/B testing:

If you prospect on a regular basis, automating the process is a must, and that’s where the right tools come in. Let LeadIn help you optimize your outreach. You can register for a free trial for 14 days and discover all the benefits of LinkedIn and email automation with our A/B testing option.

Launch your first prospecting campaign today!